This is what software development looks like now

It's ... interesting?

My old colleague and personal hero Jared Short received a sponsorship from Typesense to build something interesting with their search engine. The thing he built somewhat took me aback. I asked him to write up his development process, stream-of-consciousness style, so we can all benefit from it. This is what the software engineering world looks like now. For better or for worse, there is no going back.

Jared’s words follow:

A comical application of AI and really fast search

I spent a couple days thinking about what to build, and after revisiting the Typesense feature list a few times, one idea stood out: semantic search using embeddings.

Quick primer on embeddings (skip this if you already know)

If you're not familiar with embeddings, they are a technique to represent data, especially complex or high-dimensional data, in a simpler, lower-dimensional form.

In the context of text, this means words that have similar meanings are mapped closer together in the vector space. For example, words like "vehicle,", "airplane," and "helicopter" would be placed nearer each other in the vector space, while they would be further from less related words like "pencil" or "telephone." This makes it easier for a machine to understand and process the relationships between different words. Let’s assume a 2-dimensional vector space (X, Y). Words like “vehicle” may be assigned a value like (2, 3) and “airplane” (2.2, 3.2) while a much less related “pencil” would be (5, 1).

Represented as a graph, vector search with those embeddings on the 2d space would look something like this.

You can see then how if you took similar words and a more dimensional space, most embedding models are hundreds or low single digit thousands right now. Searching for things like helicopters, we can use the dimensions to know that semantically, results like “transportation” are more relevant than “office”.

The project: “Relevant Brazeal”

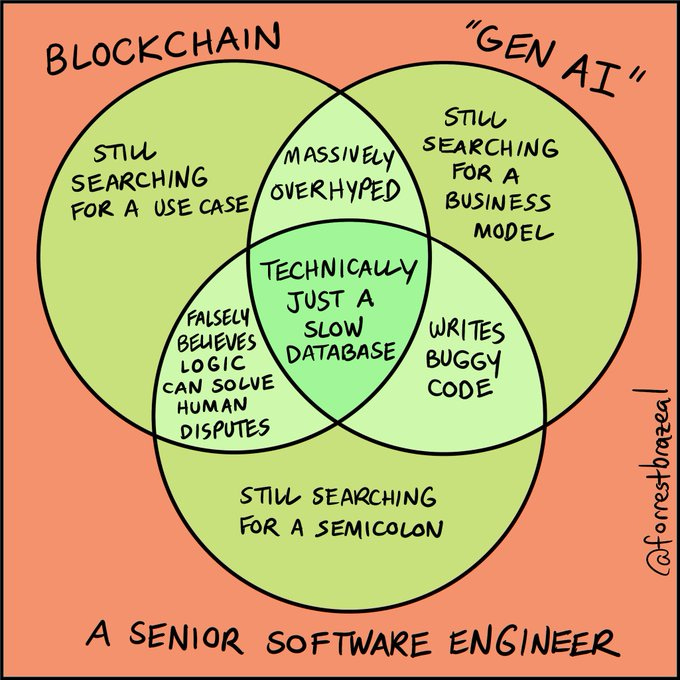

So I knew I wanted to use embeddings to search for something, but I still wasn’t sure what. While I was procrastinating, it just so happened that Forrest had recently posted one of his classic comics…

I also had been looking for one of his comics that I knew had a Titanic or something on it describing the hidden complexities of serverless computing. There we go. I wanted a semantic / ludicrously fast search over Forrest’s comics.

I also gave myself a side quest… since we are leaning into AI, just lean all the way in. I wanted to hand write as little code as possible to see for myself how far we really are these days with the whole “AI coder” thing.

I got permission from Forrest, he dumped me a JSON blob of his comics, and away we go.

Problem 1: Images might be worth 1000 words, but they don’t actually have words

The giant JSON blob mostly amounted to a title and link to an image. This turns out to be approximately zero use when it comes to any kind of search, let alone semantic search.

Well, I said we were going all in on AI. I popped open zed, my editor of choice because it is real fast and has pretty solid AI capabilities, and then prompted as follows:

> write a script that uses OpenAI gpt-4o to extract the captions and descriptions of a comic. It spit out some Python. I haven’t used Python for a couple years at this point. So naturally this was followed by:

> how do I setup a python project in 2024 > what is poetry> no, i mean python poetryEventually we got there. A few more prompts of “make this script take an argument of a file with a JSON blob with these properties”, and some fine tuning of the prompt of what parameters I wanted in the response, and we had some surprisingly solid results from nothing more than an image and fairly simple prompt.

Code / Input: GitHub Link

Output for this image:

{

"description_environment": "The image is a parody of a Jeopardy game show with a game board and contestants.",

"description_happening": "A person stands at a podium, asleep or tired, faced with a board of 'Engineering Jeopardy' questions. The board's categories are 'Design Decisions', 'Time Estimates', 'Tool Choices', 'Best Practices', and 'Performance', with corresponding monetary values. Many of the answers on the board are 'It Depends'. Three contestants are raising their hands frantically.",

"description_humor": "The humor arises from the absurdity of engineering questions often having the vague answer 'It Depends', poking fun at the complexity and uncertainty in the field of engineering.",

"caption": "ENGINEERING JEOPARDY; COSTS; DESIGN DECISIONS; TIME ESTIMATES; TOOL CHOICES; BEST PRACTICES; PERFORMANCE; IT DEPENDS; $200; $200; IT DEPENDS; $200; $200; $400; IT DEPENDS; $400; $400; IT DEPENDS; IT DEPENDS; $600; $600; $600; IT DEPENDS; $600; IT DEPENDS; $800; $800; IT DEPENDS; $800; $800; IT DEPENDS; IT DEPENDS; $1000; $1000; IT DEPENDS; $1000; $1000; @forrestbrazeal",

"tags": ["engineering", "jeopardy", "parody", "humor", "complexity"],

"suggested_alt_text": "A parody of Jeopardy called 'Engineering Jeopardy'. The board features categories like 'Design Decisions' and 'Performance' with many answers being 'It Depends'. A tired contestant stands at a podium while three contestants raise their hands eagerly.",

"title": "Engineering Jeopardy"

}

I was impressed, to say the least.

It had taken me maybe 15 minutes to get to this point.

I wrote zero original code so far. I did edit the prompt a bit to add some additional properties to the response and give a little bit of extra detail in what I expected (captions to be separated by a semi-colon but still be a single string).

Heck, I didn’t even pick the language the script was written in.

When I ran this, I realized it was going to take a little while to get a couple hundred of these images processed… so I simply prompted again:

> processing images is a bit slow, parallelize this to do it in batches of 10 and run all images in a batch in parallel.

> add progress tracking and time estimation so I know how long this will takeOn the first run, it imported some random lib and the script was crashing. Pretending I didn’t know why, I popped the error code in the chat assistant, it told me what to do and all was fixed.

Is it production-ready quality code and something I would be proud of? No. Does it work and do what I want? Seems like it. Plus it’s a script for something I wanted to tinker with. It’ll do.

Outside of the search usefulness, the AI also produced fairly good suggested_alt_text which could be useful for folks using screen readers to still get some of the description and possibly humor of the comic.

Problem 2: We have the data, now how do we search?

Popping open the Typesense documentation and searching (unsurprisingly powered by Typesense) for how to run it locally, there are a few options, and I opted for a quick docker run. A few moments later I had a local running Typesense instance. Refreshingly simple, and it also means I should be able run this on any container hosting platform and get an identical experience.

Now a story as a series of prompts:

Creating the script to index the collection

> Write a script that takes a JSON file path as an arg, gets the content, and writes it to a Typesense collection

> Make tags a faceted propertyNow at this point, it worked. Simple curl requests to the local endpoint worked, but only for words really found in the text. Looking for something like “titanic” didn’t return anything. This makes sense; I hadn’t yet enabled the “Hybrid” search mode of Typesense, which takes both a keyword and semantic search together to produce the best results.

The weakest part of this process was that Typesense doesn’t really provide much guidance on the “best” embeddings models to use or much guidance on any of the differences in their docs. I assume this is largely because this is an “it depends” type situation on what you should pick, depending on your text domain.

I was at a total loss for which model to use. After some quick searching and finding the Massive Text Embedding Benchmark, I ended up going with ts/gte-small since it seemed like a good tradeoff of size vs quality. I have no idea if that was a good choice or not, but the results will speak for themselves.

Enabling the embeddings to be computed was incredibly simple, just a simple edit to the collection creation script (I did do this manually instead of prompting) and then recreating the index and we were ready for semantic searches.

'fields': [

// … all the other fields

{

"name": "embedding",

"type": "float[]",

"embed": {

"from": [

"description_humor",

"description_environment",

"title"

],

"model_config": {

"model_name": "ts/gte-small"

}

}

}

]Creating the search “app”

This is what web app development looks like now:

> Write a single index.html file that uses instantsearch.js and typesense to provide a search over a collection, provide some basic clean styling and present results as a grid of images using the `url` property

> Add the title property to the top of each result item

> Add an instantsearch faceting component for the tags property, place it to the left of the image grid

> Provide a dark mode that respects the user browser / os settings

> Use the Cadillac of frontend, jQuery, to make each image grow to a reasonable readable size on hover

> Make each image click go to the reference url in a new tab

> Add a title and sub-header tags to the top of the pageI did make a few edits in the fairly readable produced code, like adding the title and links / info to the headers.

The ridiculous results

It turns out this all works better than I ever expected.

Not only was I able to fulfill my mission of finding the “comic with a Titanic in it”, but the semantic search is extraordinarily flexible. “Bird” picks out comics with chickens and penguins in it. “People waiting in line” picks out exactly what you’d expect. More exacting caption search is perfect. All of this searching works as quickly as I can type into the search bar, with results returning in milliseconds thanks to Typesense.

I threw everything up on GitHub Pages and you can play with it to your heart’s content. Once again, Typesense was kind enough to sponsor hosting the collection in their Typesense Cloud offering so I didn’t need to worry about managing anything to keep the demo alive long term.

You can also view all the source code here. Again, this isn’t production-quality code, it’s probably barely even re-usable code. But that wasn’t the point of this exercise!

I ended up walking away fairly impressed with the current state of things in AI, as well as the speed, ease of use, and developer-friendliness of Typesense. Starting from a JSON blob with a list of URLs to comic images, I was able to get incredibly fast, semantic search over that set of images, with minimal coding required from my end. All with a couple hours of prompting and reading some docs here and there.

So what’s the takeaway? Largely, I believe there is value for a developer to spend their time to push the bounds of what they think Gen AI can add to their workflow. Pick a toy project like this one, and challenge yourself to get as far as you can with prompting and iterating, without hand-jamming much code. Find the limits of what Gen AI can and can’t do (yet), and maybe discover some responsible ways to augment your day-to-day workflows.

Forrest here again. Thanks, Jared!

If you would enjoy getting sponsored to hack on interesting side projects like this, my cofounder Emily and I would love to hear from you.

Links and events

Speaking of generative AI, I recently appeared on Rob Collie’s Raw Data podcast, trying to work out my complicated feelings about AI.

Still speaking of generative AI, because aren’t we all, I will be premiering an absolutely ridiculous mini-musical about the types of ways AI makes us feel at Gene Kim’s ETLS event in August. I’ll be bringing a grand piano and all sorts of other nonsense to the Fontainebleau in Las Vegas. It should be … an experience. You should convince your boss to buy you a ticket. Say it’s for training.

On Monday I closed out Day 1 of the marvelous fwd:cloudsec conference with some brand-new songs about RTO, AI, and other things that make us sad.

Pluralsight, the longsuffering sponsor of the Cloud Resume Challenge, asked me to tell you about a new offer for challengers: if you post about the Cloud Resume Challenge on LinkedIn using hashtag #CloudResumeChallenge, and you tag Pluralsight as your learning platform, they will reach out to give you $50 in free AWS credits. I’m also told that if they really like what you said, they might even give you $1000 in credits. This seems like a pretty good offer to me. You should take them up on it.

Cartoon of the day

All this talk about Typesense and cartoons got me thinking…it’s time to bring back the Probably Wrong Flowcharts.

Excellent work! I learned a lot! Thank you!

I'm sure I'll be re-reading this several times over the next couple of months.